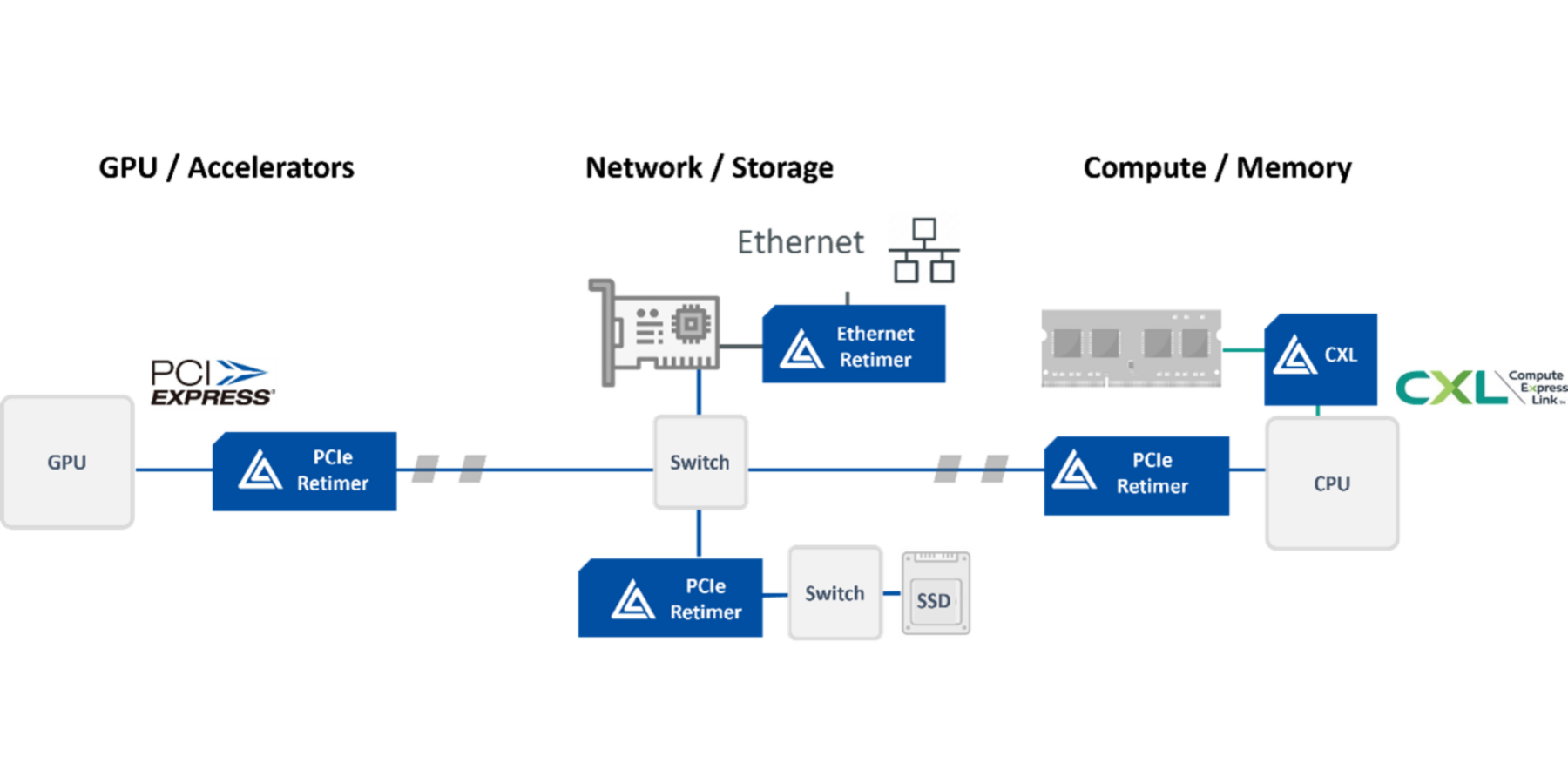

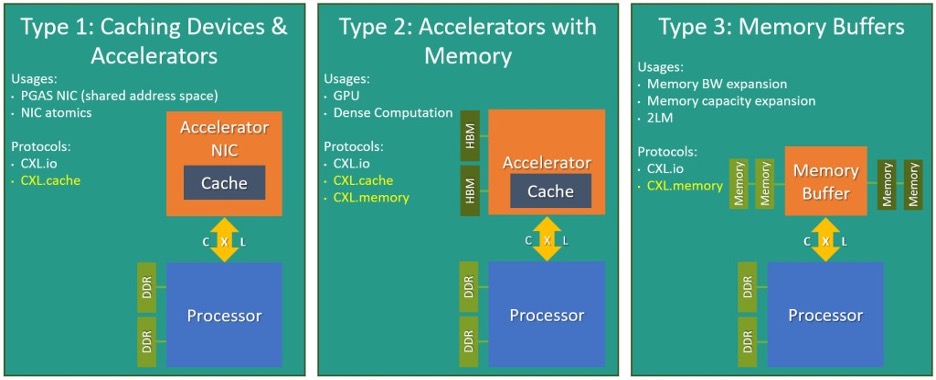

Meeting compliance standards and supporting plug-and-play interoperability are critical, not just for Astera Labs and our customers, but also for the continued success of the AI and Cloud Infrastructure ecosystem. The CXL Consortium’s compliance test events give consortium members a venue to collaborate and test the functionality and interoperability of their end products, which in turn enables end customers to deploy CXL solutions seamlessly.

We achieved a significant milestone with our Leo CXL Smart Memory Controller at the CXL Consortium Compliance Test Event in October 2023. The Leo memory controller and A1000 add-in card passed the rigorous CXL 1.1 Compliance Verification (CV) tests, Physical Layer tests, and Protocol suite tests. As a result, both were added to the CXL Consortium Integrators List in October 2023.

Dr. Joachim Peerlings, Vice President of Network and Data Center Solutions, Keysight, said, “Keysight collaborated closely with Astera Labs to facilitate testing of their Leo CXL Smart Memory Controllers. These controllers play a crucial role in accelerating AI and cloud infrastructure with memory expansion enabling robust CXL Link capabilities. This achievement will accelerate industry adoption of this important high-speed interconnect for coherent sharing of memory between computing devices. This development was enabled by Keysight’s PCIe 6.0 / CXL Protocol Analyzer and Exerciser.”

Joe Mendolia, Vice President, PSG Marketing, Teledyne LeCroy, said, “Teledyne LeCroy congratulates Astera Labs for the Leo CXL Smart Memory Controller portfolio’s inclusion on the CXL Consortium Integrators List. This milestone was achieved through the use of our industry leading CXL protocol analyzer and exerciser and the result of our deep collaboration on CXL protocol testing demonstrating that Leo is ready for robust, real-world CXL deployments as the ecosystem starts to emerge.”

The Road Ahead: Building on Compliance with the Cloud-Scale Interop Lab

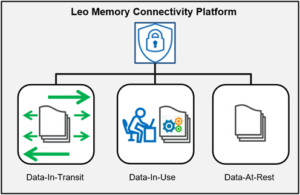

At Astera Labs, we don’t stop at compliance. In our Cloud-Scale Interop Lab, we thoroughly tested Leo’s hardware and firmware with a diverse array of CXL hosts, memory devices, and operating systems. We ran a battery of tests, from the physical layer to the system level, using real-world use cases that simulate large-scale cloud deployments. These tests help ensure that our Leo CXL Smart Memory Controllers can withstand the rigors of demanding, heterogeneous data center environments.

Cloud-Scale Interop Lab Test Suite

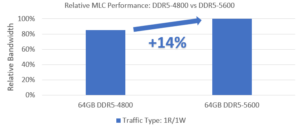

Leo’s entry into the CXL Consortium Integrators List, combined with rigorous supplemental validation with DDR5-5600 memory modules in our Cloud-Scale Interop Lab, is a strong testament to its robustness. That robustness was forged through deep partnerships with multiple vendors to ensure interoperability across increasingly diverse CXL architectures, and it translates to faster time-to-market and reduced deployment costs for our customers.

We invite you to download our Leo Interop Reports today for more details.