At DesignCon, we showcased our new Aries PCIe®/CXL® Smart Cable Modules (SCMs) that enable multi-rack GPU clustering for AI with an industry-leading 7-meter reach over flexible copper cables.

Videos

Astera Labs Video Library

Aries PCIe®/CXL® Smart Cable Modules™: First Look Demo

Get your first look at an end-to-end demonstration of our Aries PCIe/CXL Smart Cable Modules (SCMs), which enable multi-rack GPU clustering for AI with an industry-first 7-meter reach over flexible copper cables.

Articles & Insights

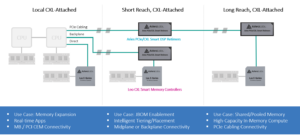

Astera Labs’ Flexible CXL Product Suite Enables Low-Latency Memory Expansion

Artificial intelligence (AI) is the single most transformative technology impacting everyday lives. Data-intensive AI applications, as well as in-memory databases, high-performance computing (HPC) and high-performance file systems, are driving the need for faster interconnects between CPUs, GPUs, TPUs, DPUs, SmartNICs and FPGAs. Low latency is also critical, especially for memory interconnects. Compute Express Link™…

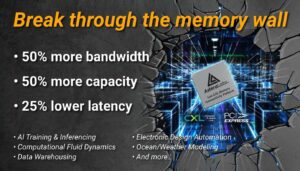

Breaking Through the Memory Wall

The term “memory wall” was coined in 1994 to describe what was becoming an obvious problem at the time: processor performance was outpacing memory interconnect bandwidth. In other words, memory access was limiting compute performance. Almost 30 years later, this statement still holds true, especially in memory-intensive applications such as artificial intelligence (AI), where…

Astera Labs Delivers Industry-First CXL Interop with DDR5-5600 Memory Modules

Earlier this year, we announced the launch of our Cloud-Scale Interop Lab for CXL to provide robust interoperability testing between our Leo Memory Connectivity Platform and a growing ecosystem of CXL-supported CPUs, memory modules and operating systems. By providing this critical testing, we enable customers to deploy CXL-attached memory with confidence by minimizing interoperational…

Three Things to Know about Astera Labs’ Taurus Ethernet Smart Cable Module Diagnostic Capabilities

Today’s data centers are under pressure to keep up with the ever-increasing demand for data processing, transfer and storage. This is especially true with the advent of generative artificial intelligence (AI) and the continued investment in big data and AI initiatives by more than 97% of organizations[1]. To keep this data moving and easily accessible,…