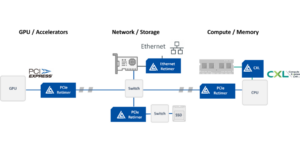

At DesignCon, we showcased our new Aries PCIe®/CXL® Smart Cable Modules (SCMs) that enable multi-rack GPU clustering for AI with an industry-leading 7-meter reach over flexible copper cables.

Videos

Astera Labs Video Library

Aries PCIe®/CXL® Smart Cable Modules™: First Look Demo

Get your first look at an end-to-end demonstration of our Aries PCIe/CXL Smart Cable Modules (SCMs) that enable multi-rack GPU clustering for AI with an industry-first 7-meter reach over flexible copper cables.

Articles & Insights

The Generative AI Impact: Accelerating the Need for Intelligent Connectivity Solutions

We have entered the Age of Artificial Intelligence, and Generative AI is developing at a rapid pace and becoming integral to our lives. According to Bank of America analysts, “just as the iPhone led to an explosion in the use of smartphones and phone apps, ChatGPT-like technology is revolutionizing AI”.[1] Generative AI is changing every…

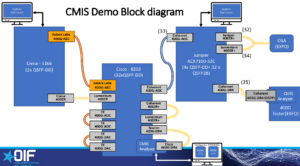

3 Key Takeaways from the Optical Fiber Communications (OFC) Conference and Expo

Earlier this month, Astera Labs participated in the largest-ever multi-vendor interoperability demo hosted by the Optical Internetworking Forum (OIF) where more than 30 member companies came together to showcase next-generation control management, electrical, and optical technologies. At the show, Astera Labs demonstrated compliance of its Taurus Ethernet Smart Cable Modules™ (SCM) with the OIF’s Common…

Let’s Get Real with CXL at MemCon!

Join us at this industry-first memory event focused on end users and systems, taking place Tuesday, March 28, through Wednesday, March 29, at the Computer History Museum in Mountain View, California. In today’s data centers, the exponential growth in Artificial Intelligence and Machine Learning applications is driving the need for a significant increase in memory…

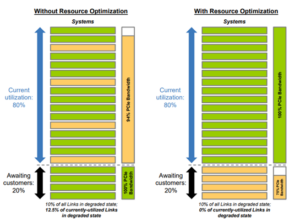

Cloud-Scale Infrastructure Fleet Management Made Easy with Aries Smart Retimers

Data centers today have a lot of servers, and within each server there is an abundance of storage, specialized accelerators, and networking/communications infrastructure. These represent tens of thousands of interconnected systems, and with the rise of hyperscalers and cloud service providers, the scale of data infrastructure is only expected to grow in the years to…
