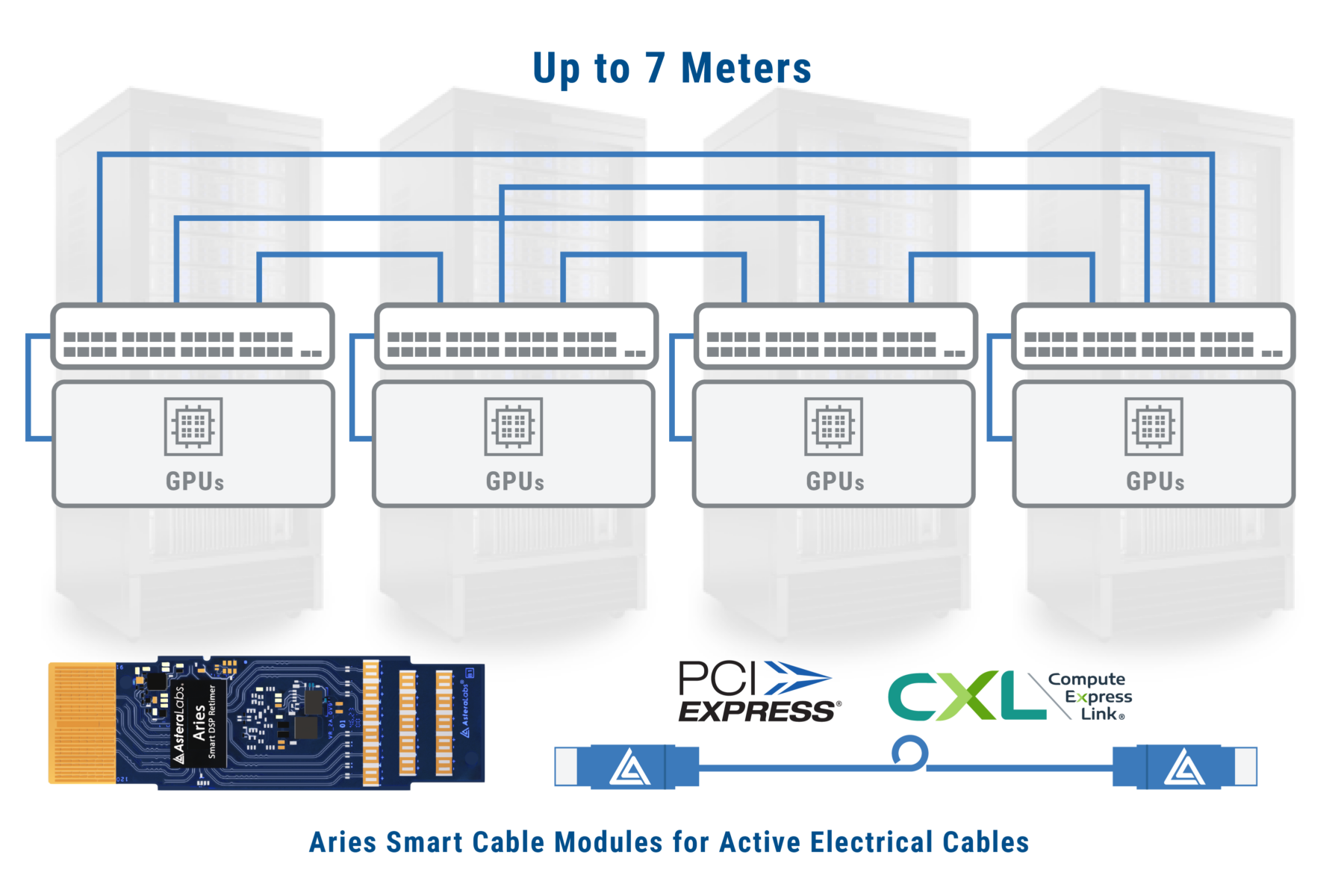

Multi-rack GPU Clustering

Delivering high data throughput for larger AI clusters with multi-rack PCIe connectivity

Requirements and Challenges

- Larger GPU clusters with high-performance connectivity are required to keep pace with the growing size and complexity of AI models

- AI infrastructure must scale GPU clusters across several racks, because space, power and thermal management constraints limit how many GPUs each rack can accommodate

- Passive PCIe direct attach copper cables (DACs) reach only about 3 meters at PCIe 5.x speeds, which effectively limits a GPU cluster to the devices that can be connected within a single rack

- Passive PCIe DACs and general-purpose active electrical cables (AECs) used for clustering lack the advanced cable and fleet management features that are essential for managing data center infrastructure

Aries Smart Cable Module (SCM) Benefits

- Purpose-built for demanding GPU clusters, delivering robust signal integrity and link stability over long distances

- Improves cable routing and rack airflow with low-power active electrical cables (AECs) built on thin copper cabling, scaling GPU clusters within and across racks

- Extends reliable PCIe 5.x reach to up to 7 meters, enabling larger GPU clusters in a multi-rack architecture

- Provides real-time lane, link and device health monitoring and management through Astera Labs’ COSMOS suite, which offers comprehensive diagnostics and advanced Link, Fleet and RAS (reliability, availability and serviceability) management for cloud-scale deployments
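The difference between roughly 3 meters of reach for passive DACs and up to 7 meters for Aries SCM AECs only translates into rack span once a physical layout is assumed. The minimal sketch below estimates how many racks away, in a single row, a cable of a given reach can terminate. `RackLayout`, `max_rack_offset` and every dimension in it are hypothetical, illustrative assumptions, not Astera Labs data. Real cable budgets depend on actual rack dimensions, cable tray routing and bend radius.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackLayout:
    """Hypothetical rack geometry; every default is an illustrative assumption."""
    rack_width_m: float = 0.6    # assumed horizontal width of one rack
    vertical_run_m: float = 2.0  # assumed total up/down routing at both cable ends
    slack_m: float = 0.5         # assumed service loop and bend allowance


def max_rack_offset(cable_reach_m: float, layout: RackLayout) -> int:
    """Estimate how many racks away (same row) a cable of this reach can terminate.

    Returns 0 if the link can only stay within a single rack,
    and -1 if even an in-rack link does not fit the assumed routing.
    """
    # Cable length left for horizontal travel after vertical routing and slack.
    horizontal_budget_m = cable_reach_m - layout.vertical_run_m - layout.slack_m
    if horizontal_budget_m < 0:
        return -1
    # Each rack of horizontal offset consumes one rack width of cable.
    return int(horizontal_budget_m // layout.rack_width_m)


if __name__ == "__main__":
    layout = RackLayout()
    for reach_m in (3.0, 7.0):
        print(f"{reach_m} m cable -> up to {max_rack_offset(reach_m, layout)} rack(s) away")
```

Under these assumed defaults, a 3 m cable yields an offset of 0 (the link stays within one rack), while a 7 m cable yields an offset of 7 racks. Changing any `RackLayout` field changes these numbers, which is why the sketch keeps the geometry explicit rather than hard-coding it.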