Accelerating Database Performance with Leo: FMS 2023

See how CXL® memory enhances OLTP solutions with increased transaction throughput, reduced infrastructure costs, and improved user experience.

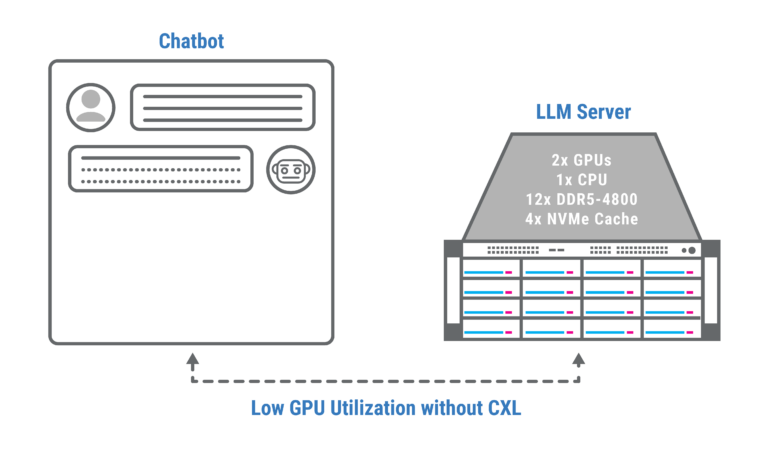

Challenge:

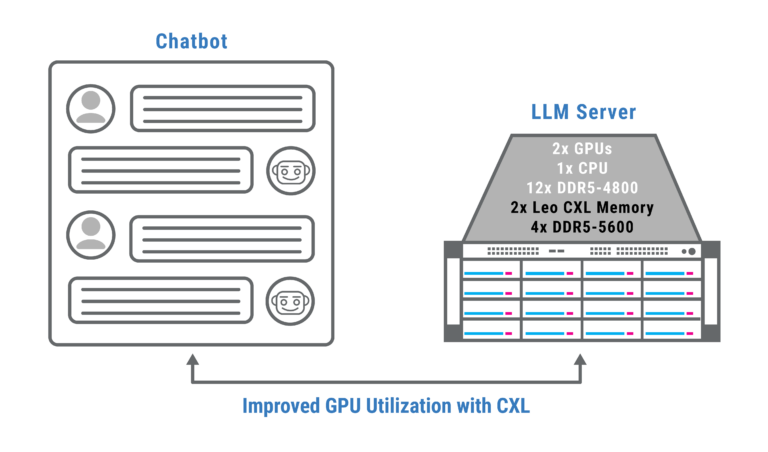

Solution: